Tips for guiding customers on their AI journey

A first step to being a credible and trusted advisor to customers seeking guidance on future AI investments is not telling them to distrust what they’ve seen with their own eyes. While it may be true that artificial intelligence and AI agents are set to revolutionize knowledge work the way industrialization revolutionized physical work in past centuries, it’s quite likely that your customers’ early experiments with AI have not foreshadowed that type of transformation.

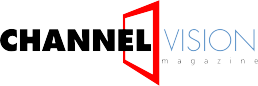

According to research out of the Massachusetts Institute of Technology’s Project NANDA (Networked Agents and Decentralized Architecture), the vast majority of organizations that have piloted gen AI have seen no measurable impact on P&L statements. Only a small fraction of organizations has moved beyond experimentation to achieve meaningful business transformation. Despite all the hype and the $30 to $40 billion in enterprise investment into gen AI so far, only 5 percent of initiatives delivered measurable business returns at six months post-pilot, exposing what MIT researchers dubbed a “widening Gen AI Divide.”

McKinsey & Co. researchers, for their part, refer similarly to a “gen AI paradox,” in which nearly eight in 10 companies have deployed gen AI in some form, but roughly the same percentage report no material impact on earnings. What’s more, only 1 percent of enterprises recently surveyed by McKinsey view their gen AI strategies as mature.

“For all the energy, investment and potential surrounding the technology, at-scale impact has yet to materialize for most organizations,” said McKinsey.

This divide, proclaimed MIT researchers, does not seem to be driven by business model, regulatory implications, industry vertical or company size. Rather, “the divide stems from implementation approach,” they continued. In other words, partners can use the lessons learned from the 300 public implementations studied by MIT to guide customers across or out of the divide as they experiment with gen AI or transition into the next wave of AI technologies.

A Wide Divide

Certainly, gen AI tools such as ChatGPT and Copilot have been widely adopted, with more than 80 percent of organizations surveyed having explored or piloted them, and nearly 40 percent reporting deployment. But these tools primarily enhance individual productivity, not P&L performance, the MIT study showed.

“Despite high-profile investment, industry-level transformation remains limited,” said MIT researchers. “GenAI has been embedded in support, content creation and analytics use cases, but few industries show the deep structural shifts associated with past general-purpose technologies such as new market leaders, disrupted business models or measurable changes in customer behavior.”

All the while, the more customized, enterprise-grade systems “are being quietly rejected,” said MIT researchers. While 60 percent of organizations evaluated such tools, only 20 percent reached pilot stage, and just 5 percent reached production. Most fail due to “brittle workflows, lack of contextual learning and misalignment with day-to-day operations.”

McKinsey shared similar findings. It cited an imbalance at the heart of its paradox between widely deployed “horizontal” copilots and chatbots, which have scaled quickly but deliver diffuse, hard-to-measure gains, and more transformative “vertical” or “function-specific” use cases, of which about 90 percent remain stuck in pilot mode.

According to the MIT data, resource intensity had little bearing on success. Large enterprises, defined as firms with more than $100 million in annual revenue, led in pilot count and allocated more staff to AI-related initiatives. Yet these organizations reported the lowest rates of pilot-to-scale conversion. Smaller and mid-market firms, which tend to move faster and more decisively, had more success with gen AI. These top performers reported average timelines of 90 days from pilot to full implementation. Large enterprises, by comparison, took nine months or longer, according to MIT’s findings.

Similar findings emerged across most verticals studied, as well. Of the nine industry segments studied, only tech and media (which often prioritize marketing, content and developer productivity) showed clear signs of structural change.

Why a Divide

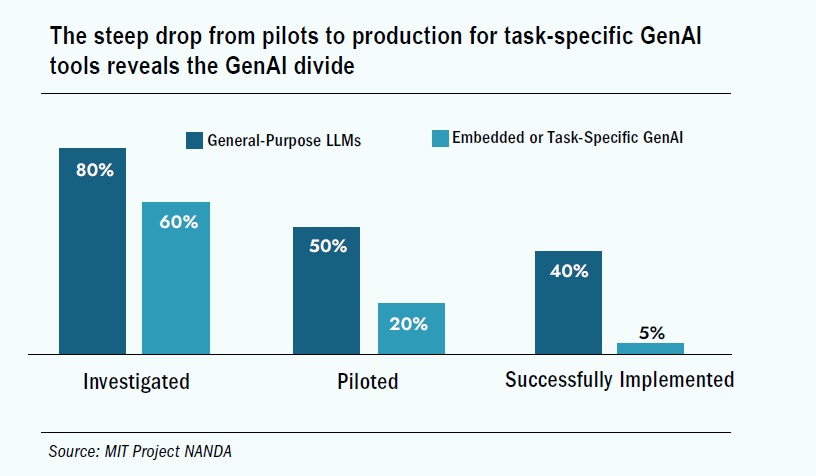

If you ask employees directly why their companies get stuck on the wrong side of the divide, you’ll hear some familiar complaints that come with emerging technologies, such as lack of executive buy-in and resistance to change. MIT’s analysis of implementations, however, suggested organizations aren’t investing in the right places. In terms of functional focus, investment in gen AI tools is heavily concentrated, said MIT researchers, with sales and marketing functions capturing approximately 50 to 70 percent of AI budget allocation across organizations surveyed.

In general, front-office tools such as those for sales and marketing get the attention because their outcomes are highly visible, impacts are measured easily and the gains are board-friendly. Metrics such as demo volume or email response time, for instance, align directly with board-level KPIs, said MIT. While this bias reflects easier metric attribution, “some of the most dramatic cost savings we documented came from back-office automation,” said MIT researchers. “While front-office gains are visible and board-friendly, the back-office deployments often delivered faster payback periods and clearer cost reductions.”

Organizations that focused AI investment on back-office functions such as legal, procurement, operations and finance experienced more substantial, although more subtle, efficiencies such as fewer compliance violations, streamlined workflows or accelerated month-end processes. Real cost savings came when organizations were able to replace BPOs and external agencies with AI-powered internal capabilities – and not from cutting internal staff.

“This investment bias perpetuates the GenAI Divide by directing resources toward visible but often less transformative use cases, while the highest-ROI opportunities in back-office functions remain underfunded.”

A similar scenario emerges surrounding investment in general versus specific tools. Consumer-grade tools such as ChatGPT and Copilot are widely used and widely praised among respondents for their flexibility, familiarity and immediate utility. At the same time, generic tools such as LLM chatbots appear to show high pilot-to-implementation rates of more than 83 percent.

But again, these tools were used to make changes that were more visible than transformative, said MIT researchers, largely applied to quick tasks while leaving complex projects requiring customization or sustained attention to humans. Yet users of consumer-grade and generic gen AI tools were “overwhelmingly skeptical of custom or vendor-pitched AI tools,” said the MIT study, describing them as brittle, overengineered or misaligned with actual workflows.

“Users prefer ChatGPT for simple tasks but abandon it for mission-critical work due to its lack of memory,” said the study.

Herein lies what MIT researchers called the “learning gap” that is the primary factor keeping organizations on the wrong side of the gen AI divide: static tools that don’t learn and can’t evolve, integrate poorly into workflows and fail to deliver context.

“The core barrier to scaling is not infrastructure, regulation or talent. It is learning,” stated MIT researchers. “Most GenAI systems do not retain feedback, adapt to context or improve over time.”

A corporate lawyer at a mid-sized firm exemplified this dynamic. Her organization invested $50,000 in a specialized contract analysis tool, yet she consistently defaulted to a $20-per-month general-purpose tool for drafting work.

“Our purchased AI tool provided rigid summaries with limited customization options. With ChatGPT, I can guide the conversation and iterate until I get exactly what I need,” this lawyer told MIT researchers. “The fundamental quality difference is noticeable, ChatGPT consistently produces better outputs, even though our vendor claims to use the same underlying technology.”

Yet the same lawyer who favored ChatGPT for initial drafts drew a clear line at sensitive contracts: “It’s excellent for brainstorming and first drafts, but it doesn’t retain knowledge of client preferences or learn from previous edits. It repeats the same mistakes and requires extensive context input for each session. For high-stakes work, I need a system that accumulates knowledge and improves over time.”

In other words, users appreciate the flexibility and responsiveness of consumer LLM interfaces but require the persistence and contextual awareness that current tools cannot provide, said MIT.

“Our data reveals a clear pattern: the organizations and vendors succeeding are those aggressively solving for learning, memory and workflow adaptation, while those failing are either building generic tools or trying to develop capabilities internally,” argued MIT.

Agentic AI systems, which specifically maintain persistent memory, learn from interactions and can autonomously orchestrate complex workflows, directly address the learning gap that defines this gen AI divide. This can be seen in customer service agents that handle complete inquiries end-to-end, financial processing agents that monitor and approve routine transactions, and sales pipeline agents that track engagement across channels, said MIT. In the meantime, an analysis of buyers and organizations that successfully crossed the gen AI divide provides advisors with information to guide customers as they enter the realm of AI or shift to the next wave of AI technologies.

Getting Across

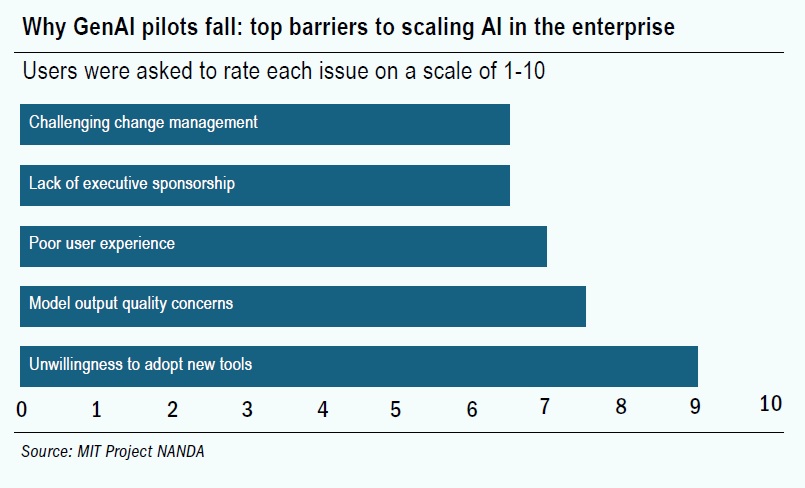

For starters, organizations that successfully crossed the divide approached AI procurement differently. Top buyers acted less like SaaS customers and more like clients for business process outsourcing (BPO), holding vendors to benchmarks as they would a consulting firm or BPO. These organizations, said MIT researchers, demanded deep customization aligned to internal processes and data; benchmarked tools on operational outcomes, not model benchmarks; and partnered through early-stage failures, treating deployment as a co-evolution.

“The most successful buyers understand that crossing the divide requires partnership, not just purchase,” said the report.

Likewise, strategic partnerships achieved a significantly higher share of successful deployments than internal development efforts. Although researchers observed far more “build” initiatives than “buy” initiatives in their sample, success rates favored external partnerships. Pilots built via strategic partnerships were twice as likely to reach full deployment as those built internally, while employee usage rates were nearly double for externally built tools.

“[P]artnerships often provided faster time-to-value, lower total cost and better alignment with operational workflows,” argued the report. “Companies avoided the overhead of building from scratch, while still achieving tailored solutions. Organizations that understand this pattern position themselves to cross the GenAI Divide more effectively.”

Successful organizations also tended to decentralize the sourcing of AI initiatives, relying on a type of bottom-up sourcing versus a central lab. Rather than relying on a centralized AI function to identify use cases, winners allowed individual contributors, budget holders and team managers to surface problems, vet tools and lead rollouts.

“Many of the strongest enterprise deployments began with power users, employees who had already experimented with tools like ChatGPT or Claude for personal productivity,” said the report. “These ‘prosumers’ intuitively understood GenAI’s capabilities and limits and became early champions of internally sanctioned solutions.”

The most effective AI-buying businesses also did not wait for perfect use cases or central approval. “Instead, they drive adoption through distributed experimentation, vendor partnerships and clear accountability,” continued the report. “These buyers are not just more eager; they are more strategically adaptive.”

Concerns among your customers of ending up with “more pilots than Lufthansa” are certainly justified, as are feelings of “seeing AI everywhere but in our P&L statement.” But customers can take heed: organizations that successfully cross the gen AI divide do three things differently, MIT researchers advised. They buy rather than build, empower individuals and line managers rather than central labs, and select tools that integrate deeply while adapting over time.

“For organizations currently trapped on the wrong side,” they concluded, “the path forward is clear: stop investing in static tools that require constant prompting, start partnering with vendors who offer custom systems and focus on workflow integration over flashy demos.”